Introduction

Imagine unlocking your front door, starting your car, and paying for your morning coffee—all without reaching for your wallet or keys. This might sound like something out of a sci-fi movie, but it’s a reality for a growing number of people who have embraced microchipping. These tiny implants, typically the size of a grain of rice, are embedded under the skin and offer a new level of convenience in an increasingly digital world. Companies and individuals alike are experimenting with this technology, touting it as the next step in human evolution.

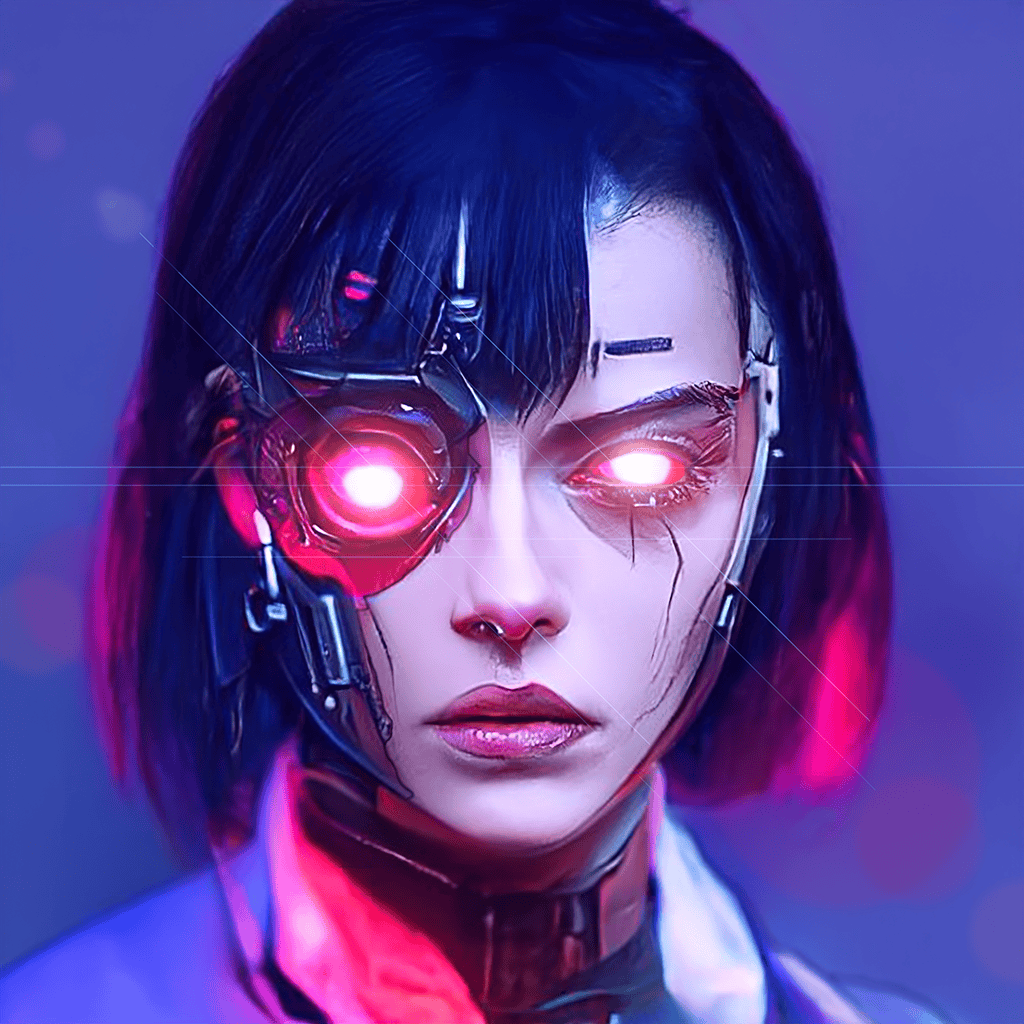

But as with any innovation, microchipping raises significant questions. Is this technology a glimpse into a future where humans seamlessly integrate with machines, or does it open a Pandora’s box of ethical and privacy concerns? The idea of embedding microchips in the human body evokes strong reactions—some see it as a progressive biohack that could revolutionize our lives, while others view it as a dangerous intrusion that could lead to loss of autonomy and control.

In this article, we will explore the complex world of microchipping, examining both its potential as a groundbreaking technology and the challenges that could render it a dead end. From convenience and security to privacy issues and ethical dilemmas, we’ll delve into whether microchipping truly represents a step forward for humanity or a path fraught with risks.

Historical Background of Microchipping

The concept of microchipping dates to the late 20th century, when early applications centered on identifying animals. In the 1980s, veterinarians began implanting microchips in pets to help identify them if they were lost. These devices, about the size of a grain of rice, used passive Radio Frequency Identification (RFID) technology: the chip has no battery and, when powered by a nearby scanner, transmits a unique identification number. This simple yet effective use of microchipping quickly became popular, and by the 1990s millions of pets worldwide had been chipped, helping reunite countless animals with their owners.

As the technology matured, innovators began to explore the potential for human applications. In 1998, British scientist Kevin Warwick became one of the first people to have a microchip implanted in his body as part of a groundbreaking experiment. Warwick’s chip allowed him to control lights, doors, and other devices in his office simply by being present, showcasing the potential for microchipping to integrate with everyday life. His experiment marked the beginning of a new era where microchips were no longer just for tracking pets—they were now seen as a tool for enhancing human capabilities.

In the early 2000s, companies and regulators started to take notice of this technology. In 2004, the U.S. Food and Drug Administration (FDA) approved the VeriChip, an implantable RFID chip intended for medical use. Rather than storing medical records itself, the chip carried a unique identification number that hospital staff could scan and use to look up the patient’s records in a database, with the aim of speeding up care in an emergency. Despite this promise, the VeriChip faced significant public backlash over privacy and security, and its adoption remained limited.

Over the following decade, implant technology continued to evolve. Many newer implants use Near Field Communication (NFC), a short-range form of RFID also found in smartphones and contactless payment cards, and can store small amounts of data, such as a web link or contact details, in addition to an identification number. In countries like Sweden, microchipping began to gain popularity among early adopters who used the chips for everything from opening doors to paying for public transportation. These advances fueled a growing interest in microchipping as a convenient, all-in-one solution for daily tasks.

Despite these advances, the technology has faced significant challenges. Concerns over privacy, data security, and health risks have slowed its adoption, particularly in regions where skepticism of government and corporate surveillance is high. As the debate continues, the technology stands at a crossroads, caught between its promise of convenience and the ethical questions it raises about the future of human autonomy.

Today, microchipping remains a niche technology, with a dedicated but small group of enthusiasts. Yet, its journey from animal tracking to human enhancement raises important questions about where this technology could lead us next. Whether microchipping will become a widespread tool for everyday convenience or remain a controversial experiment is still an open question—one that the rest of this article will seek to address.

The Progressive Side of Microchipping

Microchipping has gained traction among tech enthusiasts, early adopters, and futurists who see it as a natural progression in the integration of technology with the human body. This section explores the various benefits and potential that microchipping holds, portraying it as a progressive step forward in biohacking and human enhancement.

1. Convenience and Integration

One of the most compelling arguments for microchipping is the convenience it offers. By embedding a small chip under the skin, users can streamline numerous daily tasks. For example, RFID or NFC-enabled microchips can replace traditional keys, allowing users to unlock doors with a simple wave of the hand. Similarly, these chips can store payment information, enabling contactless transactions without the need for a wallet or phone. This all-in-one functionality is particularly appealing in a world where we are constantly juggling multiple devices, cards, and passwords.

Beyond just payments and access, microchips can also integrate with smart home devices, enabling users to control lights, thermostats, and security systems with ease. For tech enthusiasts, this level of integration represents the cutting edge of convenience, where technology seamlessly enhances daily life without the need for external devices.
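
At its simplest, using an implant as a key means a reader compares the chip’s unique ID (UID) against a list of authorized IDs. The Python sketch below assumes a hypothetical door reader that reports each UID as a colon-separated hex string; the UID values and the `should_unlock` function are invented for illustration, not taken from any product.

```python
# Hypothetical door controller logic. The UID values and function name are
# illustrative assumptions, not any vendor's API.
AUTHORIZED_UIDS = {
    "04:A2:2B:1C:5D:80:01",  # example value: resident's implant
    "04:7F:13:9E:22:61:80",  # example value: spare key fob
}


def should_unlock(scanned_uid: str) -> bool:
    """Return True if the UID reported by the reader is on the allowlist."""
    # Normalize whitespace and letter case so "04:a2:..." matches "04:A2:...".
    return scanned_uid.strip().upper() in AUTHORIZED_UIDS


print(should_unlock("04:a2:2b:1c:5d:80:01"))  # True
print(should_unlock("04:00:00:00:00:00:00"))  # False
```

Real access-control systems add logging and revocation, and more secure installations authenticate the chip cryptographically instead of trusting the UID alone, but the core decision is a lookup like this one.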

2. Security Benefits

Proponents also point to security advantages, particularly in identity verification and access control. Because an implant is physically tied to its owner, a chip that stores or links to biometric data could, in principle, verify a person’s identity more reliably than passwords or ID cards, which can be stolen, shared, or forged. In high-security environments, such as government facilities or corporate offices, microchips could help ensure that only authorized individuals have access to sensitive areas.

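Supporters also argue that microchips can help reduce identity theft. Unlike credit cards or identification documents, a microchip embedded under the skin is difficult to lose or steal. How hard it is to copy, however, depends on the chip: simpler implants that answer every reader with the same fixed ID number can be read and cloned, while chips that use cryptographic authentication are far harder to duplicate. For those concerned about the security of their personal information in an increasingly digital world, the more secure designs are an attractive option.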
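
How hard an implant is to copy depends on how it proves its identity. The sketch below contrasts two approaches, using a hypothetical ID and key: a chip that always transmits the same fixed ID, which anyone who captures it can replay, and a challenge-response scheme in which the chip answers a fresh random challenge using a secret key it never transmits.

```python
import hashlib
import hmac
import secrets

# Approach 1: fixed-ID check (hypothetical enrolled ID).
ENROLLED_ID = "04A22B1C5D8001"


def static_check(presented_id: str) -> bool:
    """Accept any input that matches the enrolled ID."""
    return hmac.compare_digest(presented_id, ENROLLED_ID)


# Approach 2: challenge-response (hypothetical shared secret).
CHIP_KEY = secrets.token_bytes(16)  # held by chip and reader; never transmitted


def chip_respond(challenge: bytes) -> bytes:
    """The chip's answer: an HMAC of the reader's challenge under its key."""
    return hmac.new(CHIP_KEY, challenge, hashlib.sha256).digest()


def reader_verify(challenge: bytes, response: bytes) -> bool:
    """Recompute the expected answer and compare in constant time."""
    expected = hmac.new(CHIP_KEY, challenge, hashlib.sha256).digest()
    return hmac.compare_digest(expected, response)


# An eavesdropper records one exchange of each kind...
captured_id = ENROLLED_ID
first_challenge = secrets.token_bytes(16)
captured_response = chip_respond(first_challenge)

# ...then tries to replay it later.
print(static_check(captured_id))  # True: the copied ID is accepted
new_challenge = secrets.token_bytes(16)  # the reader picks a fresh challenge each time
print(reader_verify(new_challenge, captured_response))  # False: the old answer no longer fits
```

Commercial secure chips implement their own protocols, often built on block ciphers such as AES; HMAC-SHA256 is used here only because it ships with Python’s standard library.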

3. Medical Applications

One of the most promising aspects of microchipping is its potential in the medical field. Implantable chips can store vital medical information, such as allergies, blood type, and pre-existing conditions, which can be accessed by healthcare professionals in an emergency. This can be life-saving in situations where patients are unable to communicate their medical history.
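
A chip does not have to carry the medical records itself. One possible design, sketched here under stated assumptions, stores only an identification number on the implant and keeps the records in a provider’s database, so access can be restricted to emergency staff. The registry contents, role names, and `emergency_lookup` function are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class MedicalSummary:
    blood_type: str
    allergies: List[str] = field(default_factory=list)
    conditions: List[str] = field(default_factory=list)


# Hypothetical provider-side registry: the chip holds only the key, not the record.
REGISTRY: Dict[str, MedicalSummary] = {
    "CHIP-000123": MedicalSummary("O-", ["penicillin"], ["type 1 diabetes"]),
}

# Hypothetical roles permitted to view records in an emergency.
AUTHORIZED_ROLES = {"paramedic", "er_physician"}


def emergency_lookup(chip_id: str, requester_role: str) -> Optional[MedicalSummary]:
    """Return the record linked to a scanned chip ID, or None if the ID is unknown.

    Raises PermissionError if the requester's role is not authorized.
    """
    if requester_role not in AUTHORIZED_ROLES:
        raise PermissionError(f"role {requester_role!r} may not view medical records")
    return REGISTRY.get(chip_id)


record = emergency_lookup("CHIP-000123", "paramedic")
print(record.blood_type, record.allergies)  # O- ['penicillin']
```

Keeping the data off the chip means records can be corrected, and access revoked, without removing the implant; the trade-off is that the scheme only works where responders can reach the database.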

Beyond emergency use, microchips are also being explored as tools for monitoring chronic conditions. For example, chips could be used to continuously monitor glucose levels in diabetics or track heart rate and other vital signs in real-time. This data could then be transmitted to healthcare providers, enabling proactive management of health conditions and reducing the need for invasive procedures.

4. Technological Innovation and Transhumanism

Microchipping is often seen as part of the broader movement of transhumanism—the idea that humans can enhance their physical and cognitive abilities through technology. For proponents of this philosophy, microchips represent a step toward a future where the boundaries between humans and machines blur, leading to new possibilities for human evolution.

Development in the field is moving quickly. Researchers are working on chips that can perform more complex functions, such as storing larger amounts of data and interacting with the Internet of Things (IoT). This work is driving interest in microchipping as a means not just of enhancing daily life but also of pushing the limits of what the human body can achieve.

5. Examples of Successful Implementation

Around the world, there are already examples of microchipping being successfully implemented on a small scale. In Sweden, thousands of people have opted to get microchips that allow them to pay for public transportation, enter secure buildings, and even store contact information. These early adopters often praise the convenience and simplicity that microchipping offers, highlighting its potential for wider adoption.

In the workplace, some companies have also offered implants to employees as a way to streamline operations. In 2017, for example, Three Square Market, a technology company in Wisconsin, offered chips to staff, and those who volunteered could use them to open doors, log into computers, and buy snacks in the company break room. While still a niche practice, these examples demonstrate that microchipping can be integrated into everyday life, offering tangible benefits for those who choose to adopt it.

These advancements illustrate the progressive potential of microchipping, particularly for those who value convenience, security, and cutting-edge technology. However, as promising as these developments may be, they also come with challenges and risks that cannot be ignored. The next section will delve into the drawbacks and ethical concerns that surround microchipping, providing a balanced perspective on this controversial technology.

The Drawbacks and Ethical Concerns

While microchipping offers several potential benefits, it also raises significant concerns that challenge its viability and widespread acceptance. From privacy and health risks to ethical dilemmas and technological limitations, this section examines the darker side of microchipping, exploring why some view it as a potential dead end.

1. Privacy Issues

One of the most significant concerns surrounding microchipping is the potential erosion of privacy. Microchips with RFID or NFC capabilities can be read by any compatible scanner. Passive implants have no battery or GPS and typically respond only to a reader held within a few centimeters, so they cannot broadcast a person’s location on their own. The fear is rather that a chip which answers every reader with the same ID could let governments, corporations, or hackers with access to enough readers log where a person has been, or read stored data without consent. The idea that someone could track your movements or access personal data stored on the chip is a chilling prospect for many.

Moreover, the data stored on microchips, such as identification information or medical records, could be vulnerable to cyberattacks. If the technology is not adequately secured, it opens the door to potential data breaches, identity theft, and unauthorized surveillance. In a world where data privacy is already a significant concern, the idea of having personal information embedded in your body amplifies these fears.
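
The tracking concern comes from correlation rather than from the chip itself: if an implant reports the same fixed ID every time it is scanned, whoever can pool the logs from many readers can reconstruct a timeline for that ID. A minimal sketch, assuming an invented log format, invented locations, and invented IDs:

```python
from collections import defaultdict

# Hypothetical read events pooled from readers at different locations.
# Each entry: (timestamp "HH:MM", reader location, ID the chip reported).
READ_LOG = [
    ("08:02", "apartment lobby", "04A22B1C5D8001"),
    ("08:15", "train station gate", "04A22B1C5D8001"),
    ("08:16", "train station gate", "04FF00112233AA"),
    ("09:01", "office door", "04A22B1C5D8001"),
]


def movement_profiles(log):
    """Group read events by chip ID and sort each group by time."""
    profiles = defaultdict(list)
    for timestamp, location, chip_id in log:
        profiles[chip_id].append((timestamp, location))
    for events in profiles.values():
        events.sort()  # zero-padded "HH:MM" strings sort chronologically within one day
    return dict(profiles)


for stamp, place in movement_profiles(READ_LOG)["04A22B1C5D8001"]:
    print(stamp, place)
```

Chips that respond with a different, unlinkable value on each read, or only after authenticating the reader, are harder to correlate in this way.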

2. Health Risks

Although microchips are generally considered safe, there are still potential health risks associated with implanting foreign objects under the skin. Some people may experience allergic reactions to the materials used in the chip, leading to irritation or infection. In rare cases, the body may reject the implant altogether, causing complications that require medical intervention.

Beyond these immediate risks, the long-term effects of carrying a microchip remain uncertain. While current evidence suggests that microchips are safe for extended periods, the technology is still relatively new, and long-term studies are limited. Concerns about unknown side effects, such as the development of tumors or other health issues, continue to linger in the minds of skeptics.

3. Loss of Autonomy

Microchipping raises important questions about personal autonomy and control over one’s own body. While voluntary microchipping may seem harmless, there are concerns that it could pave the way for more coercive practices. For example, some employers might pressure employees to get chipped for convenience or security reasons, creating a slippery slope where individuals feel compelled to accept the technology against their will.

The potential for government-mandated microchipping also raises alarms. In a dystopian scenario, microchips could be used to monitor and control populations, infringing on individual freedoms. Even in less extreme cases, the idea of being “required” to get chipped for access to certain services or benefits challenges the fundamental right to bodily autonomy.

4. Ethical Dilemmas

The ethical implications of microchipping are vast and complex. For some, the idea of implanting technology into the human body crosses a moral line, raising concerns about the commodification of the human body. Microchipping challenges traditional notions of what it means to be human, as it blurs the boundary between organic life and machine, potentially leading to societal divisions between those who embrace the technology and those who resist it.

There is also the ethical question of consent, particularly in vulnerable populations. For example, children, elderly individuals with dementia, or those with mental disabilities might be chipped without fully understanding the implications. This raises concerns about exploitation and the potential for abuse, especially if the technology is used to track or control these individuals.

5. Technological Limitations

Despite its potential, microchipping technology still faces several limitations that hinder its widespread adoption. For one, current implants hold very little data, typically measured in bytes or kilobytes rather than megabytes, which restricts the amount of information they can carry. This limitation makes it difficult to fully replace other forms of identification or data storage, so users still rely on additional devices or systems.

Additionally, the infrastructure needed to support microchip integration is not yet universal. For microchipping to reach its full potential, there needs to be widespread adoption of compatible scanners, payment systems, and security protocols. Without this infrastructure in place, microchipping remains a niche technology with limited practical applications for most people.

Furthermore, most implants cannot be upgraded in place. Unlike a phone or a card, which can be updated or swapped with little effort, taking advantage of newer chip technology means removing the old implant and inserting a new one, a minor but still surgical procedure that could deter individuals from adopting the technology in the first place.

These drawbacks and ethical concerns highlight the challenges that microchipping must overcome to be considered a viable, long-term solution. While the technology offers undeniable advantages, it also poses significant risks that cannot be ignored. As we continue to explore the potential of microchipping, it is crucial to address these concerns to ensure that the technology is developed and implemented in a way that respects privacy, autonomy, and ethical principles.

The Public Perception and Cultural Impact

Public perception of microchipping is deeply divided, with opinions ranging from enthusiastic support to staunch opposition. This section explores how different groups view the technology, how it is represented in media and pop culture, and the broader cultural and social implications it carries.

1. Varied Opinions: Enthusiasts, Skeptics, and Opponents

Microchipping evokes strong reactions across different segments of society. Enthusiasts—often tech-savvy individuals or those interested in biohacking—view microchipping as an exciting innovation. For them, the ability to streamline everyday tasks, enhance security, and integrate with technology represents the future of human evolution. They see it as a logical step in the growing trend of wearable technology and body augmentation.

On the other hand, skeptics approach microchipping with caution. They recognize the potential benefits but are concerned about the risks—particularly around privacy, security, and health. These individuals may see the technology as intriguing but not yet ready for mainstream adoption, preferring to wait until the risks are better understood and addressed.

Finally, opponents of microchipping reject the technology outright, often on ethical, religious, or philosophical grounds. Many view it as an invasive form of surveillance that threatens individual freedom and autonomy. Some Christians associate implants with the “mark of the beast” described in the Book of Revelation, while others are concerned about the potential for government or corporate overreach.

2. Media Representation: Dystopia vs. Utopia

The media plays a significant role in shaping public perception of microchipping, often presenting it in extreme terms. Dystopian narratives dominate popular culture. Black Mirror episodes such as “The Entire History of You” and “Arkangel” depict implants that record memories or let a parent monitor a child, while works like the film Gattaca and the novel 1984, though not about implants as such, supply the images of genetic screening and constant surveillance that shape how many people imagine them. In this framing, microchipping becomes a tool of authoritarian regimes, used to monitor, manipulate, and even punish individuals.

These dystopian depictions resonate with the public’s fears about surveillance and loss of autonomy, reinforcing negative perceptions of the technology. However, there are also utopian portrayals, where microchipping is shown as a means of enhancing human potential and improving lives. In these narratives, technology and humanity coexist harmoniously, with microchips enabling greater convenience, safety, and health outcomes.

This dichotomy in media representation reflects the broader societal ambivalence toward microchipping. While the potential for a utopian future exists, the fear of a dystopian reality looms large in the public consciousness.

3. Social Implications: Division and Acceptance

Microchipping also has the potential to create social divisions. As with any emerging technology, early adopters may embrace it enthusiastically, while others resist. This could lead to a divide between those who are chipped and those who are not, potentially creating new social dynamics. In extreme cases, it could even result in discrimination against those who refuse to get chipped, especially if the technology becomes more integrated into essential services or employment.

Additionally, there is the question of accessibility and inequality. If microchipping becomes a symbol of status or privilege, it could exacerbate existing inequalities, with wealthier individuals having greater access to advanced technology and its benefits. This raises concerns about a future where technology-driven disparities deepen, creating a new form of digital divide.

Despite these concerns, there are also signs of growing acceptance in certain circles. In some countries, particularly in Europe, microchipping is gaining popularity, with early adopters using it for practical purposes like accessing public transportation or making payments. As more people experience the convenience of microchipping firsthand, it’s possible that societal acceptance will grow, leading to wider adoption over time.

4. Global Trends: Cultural Differences and Regulations

The perception and adoption of microchipping vary widely across different countries and cultures. In Sweden, for example, microchipping has been embraced by thousands of people, driven by a culture that is generally open to technological innovation and convenience. Here, microchips are often seen as a natural extension of the country’s strong digital infrastructure, with people using them for everyday tasks like entering secure buildings and paying for goods.

In contrast, countries where distrust of government surveillance and corporate control runs higher, such as the United States, tend to be more skeptical, and adoption rates there are much lower. Additionally, religious and cultural beliefs play a significant role in shaping attitudes toward microchipping, with some groups viewing it as morally or ethically unacceptable.

The regulatory landscape also differs by region. In some countries, laws are being developed to address the ethical and privacy concerns associated with microchipping. In the United States, for example, several states, including Wisconsin and California, have passed laws that prohibit employers from requiring employees to get chipped. Meanwhile, other countries take a more laissez-faire approach, allowing individuals and companies to adopt the technology as they see fit, with minimal government oversight.

These global trends highlight the diverse responses to microchipping and suggest that its future may unfold differently depending on the cultural and regulatory context. Understanding these differences is key to predicting how microchipping will evolve and whether it will become a mainstream technology or remain a niche innovation.

Public perception and cultural attitudes are critical in shaping the future of microchipping. While some see it as a forward-looking technology with the potential to transform daily life, others fear its implications for privacy, autonomy, and society as a whole. As microchipping continues to develop, its success or failure may ultimately depend on how these cultural and societal concerns are addressed.

The Future of Microchipping: What Lies Ahead?

As we stand at the crossroads of technology and humanity, the future of microchipping remains uncertain. Will it evolve into a ubiquitous tool that seamlessly integrates into our lives, or will it be abandoned as an experiment that raised more concerns than it solved? This section explores the potential paths forward for microchipping, considering advancements in technology, shifts in societal attitudes, and the regulatory landscape that will shape its destiny.

1. Technological Advancements

The future of microchipping will largely depend on how the technology itself evolves. Advances in chip capabilities, such as increased data storage, enhanced security features, and the ability to integrate with more devices, could make microchipping more attractive and practical for the average person. Today’s passive implants already need no battery; further work on biocompatible materials that reduce health risks and on designs that remain functional for decades could address current concerns and pave the way for broader adoption.

Furthermore, developments in biohacking and biotechnology may allow microchips to do much more than they currently can. For instance, future chips could potentially monitor health conditions in real-time, detect illnesses before symptoms appear, or even enhance cognitive and physical abilities. These advancements could make microchipping not just a convenience but a necessity for those seeking to optimize their health and performance.

2. Societal Shifts

As technology continues to evolve, so too will societal attitudes. While microchipping is currently viewed with skepticism by many, generational changes could lead to a more accepting public. Younger generations, who have grown up with technology integrated into every aspect of their lives, may be more open to the idea of implanting technology into their bodies.

Moreover, as more people experience the benefits of microchipping firsthand—whether through convenience, security, or health improvements—public perception may shift. What was once seen as invasive or unnecessary could become normalized, much like the way smartphones and wearable devices have become ubiquitous in recent years. Cultural trends that emphasize personalization and technological enhancement could also contribute to greater acceptance of microchipping as a tool for self-improvement and empowerment.

3. Ethical and Regulatory Developments

The future of microchipping will also be shaped by ethical debates and regulatory decisions. As concerns about privacy, autonomy, and surveillance continue to grow, there will be increasing pressure on governments and institutions to create frameworks that protect individuals from potential abuses of microchip technology. This could include laws that regulate who can access the data stored on chips, how it can be used, and who has the right to refuse a chip without facing discrimination or exclusion.

International standards and guidelines may also emerge to ensure that microchipping is implemented in a way that respects human rights and ethical principles. For example, regulations could require informed consent for all microchipping procedures, prohibit the use of chips for invasive tracking or surveillance, and mandate transparency in how data collected by microchips is stored and used. Industry self-regulation could also play a role, with tech companies establishing best practices to build trust with consumers.

4. Potential Scenarios: Utopia or Dystopia?

The future of microchipping could unfold in a number of ways, depending on how these factors play out. In a utopian scenario, microchipping becomes a widely accepted tool that enhances daily life, improves healthcare, and strengthens security without compromising privacy or autonomy. People voluntarily adopt the technology, confident that their data is protected and their rights respected. Microchips become a seamless part of the digital ecosystem, empowering individuals to take control of their identities and health.

In contrast, a dystopian scenario could see microchipping used for coercive purposes, with governments or corporations mandating implants for access to services or employment. Privacy concerns are ignored, and people are tracked and monitored without their consent. The technology becomes a tool of control rather than empowerment, leading to widespread resistance and social division.

A middle-ground scenario is perhaps the most likely, where microchipping remains a niche technology, adopted by some but not all. It finds specific applications in certain industries, such as healthcare and security, but fails to gain widespread acceptance due to lingering concerns about privacy, ethics, and health risks. In this scenario, microchipping coexists with other forms of technology, offering benefits to those who choose it while remaining optional for the majority.

5. The Choice Ahead

The future of microchipping is far from set in stone. It will be determined by a complex interplay of technological innovation, societal values, and regulatory oversight. As we move forward, it is crucial that we continue to ask tough questions about the implications of embedding technology into our bodies. How can we ensure that microchipping enhances human life without compromising our privacy or autonomy? What safeguards need to be in place to prevent abuse?

Ultimately, the future of microchipping will depend on the choices we make as a society. Whether it becomes a progressive biohack that propels us into a new era of human enhancement or a dead end that raises more problems than it solves is up to us. As we stand on the brink of this technological frontier, we must carefully consider the risks and rewards, ensuring that the path we choose is one that respects our fundamental rights and values as human beings.

Conclusion: Microchipping—A Progressive Biohack or a Dead End?

Microchipping sits at the intersection of technological innovation and ethical debate, presenting a complex picture of potential benefits and significant concerns. As we’ve explored throughout this article, microchipping offers a glimpse into a future where technology is seamlessly integrated into the human body, bringing with it the promise of enhanced convenience, improved security, and revolutionary medical applications. Yet, alongside these advantages lie profound risks—privacy invasion, health complications, loss of autonomy, and deep ethical dilemmas that question the very essence of what it means to be human.

1. Weighing the Benefits and Risks

The debate over microchipping is ultimately a balancing act between embracing the opportunities it presents and safeguarding against the dangers it poses. On one side, proponents argue that microchipping could transform everyday life, streamlining tasks and enhancing personal security. In the medical field, it could save lives and revolutionize healthcare. For those invested in the idea of transhumanism, microchipping is a step toward the future of human evolution.

On the other side, critics caution against the potential for abuse, highlighting how easily microchipping could be misused by corporations, governments, or even hackers. The prospect of having personal data stored under one’s skin raises serious privacy concerns, while the potential health risks and ethical implications demand careful consideration.

2. The Cultural and Social Impact

Public perception and cultural attitudes toward microchipping will play a crucial role in its future. While some cultures and communities may embrace the technology, others may resist it, leading to social divisions and disparities in access. Media portrayals, which often oscillate between utopian and dystopian visions, will continue to shape how people view microchipping, influencing whether it is seen as a tool for empowerment or a symbol of control.

The societal impact of microchipping also cannot be ignored. As with any technological advancement, there is the potential for both positive change and unintended consequences. The choices we make about how microchipping is implemented and regulated will determine whether it becomes a force for good or a source of division and conflict.

3. Looking Ahead: The Path Forward

As we contemplate the future of microchipping, it is clear that this technology is at a crossroads. Whether it evolves into a widely accepted tool or fades into obscurity depends on how we address the challenges it presents. For microchipping to be successful, it must earn public trust by demonstrating its safety, security, and respect for individual rights.

Regulatory frameworks will need to be established to protect users from potential abuses, ensuring that microchipping remains voluntary and that data privacy is upheld. Technological advancements must continue to address the current limitations and concerns, making microchips safer, more secure, and more versatile.

4. A Progressive Biohack or a Dead End?

In the end, the question of whether microchipping is a progressive biohack or a dead end does not have a simple answer. It is both—and neither—depending on how it is developed, adopted, and regulated. Microchipping holds immense potential to improve our lives, but it also carries significant risks that could undermine its promise.

As we navigate this complex and evolving landscape, it is essential that we remain vigilant, thoughtful, and proactive in our approach. The future of microchipping is not predetermined; it is a choice that we, as a society, must make. By carefully weighing the benefits and risks, and by putting in place the necessary safeguards, we can ensure that microchipping serves as a tool for progress rather than a step toward a dystopian future.

In making this choice, we will determine whether microchipping will be remembered as a bold leap forward in human innovation—or as a cautionary tale of technology gone too far. The decision lies in our hands, and it is one that will shape the course of our technological and ethical journey for years to come.